Most of the development effort for FieldKit has been focused on the hardware, its firmware, and the app. There’s another component in the platform that hasn’t gotten much attention, because in the grand scheme of human undertakings it’s Just Another Website. It’s what we internally call the Portal.

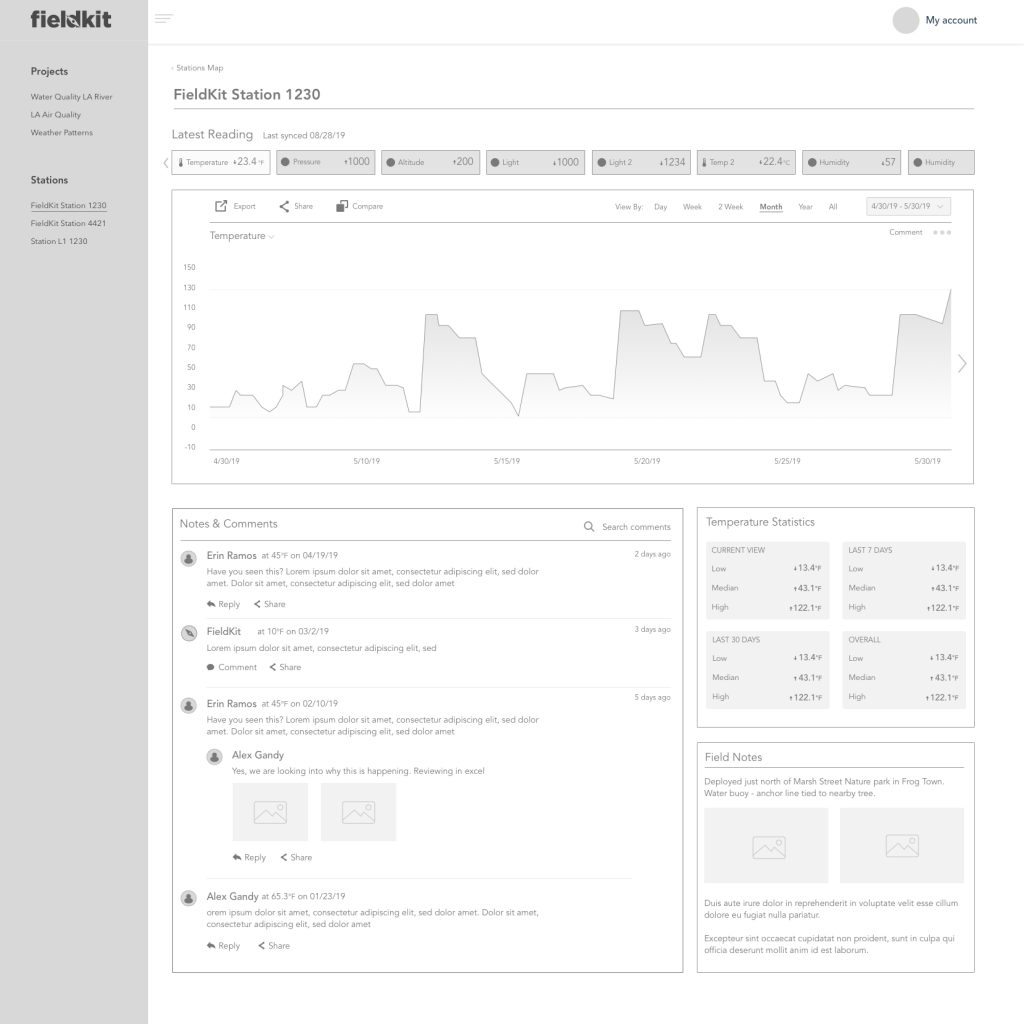

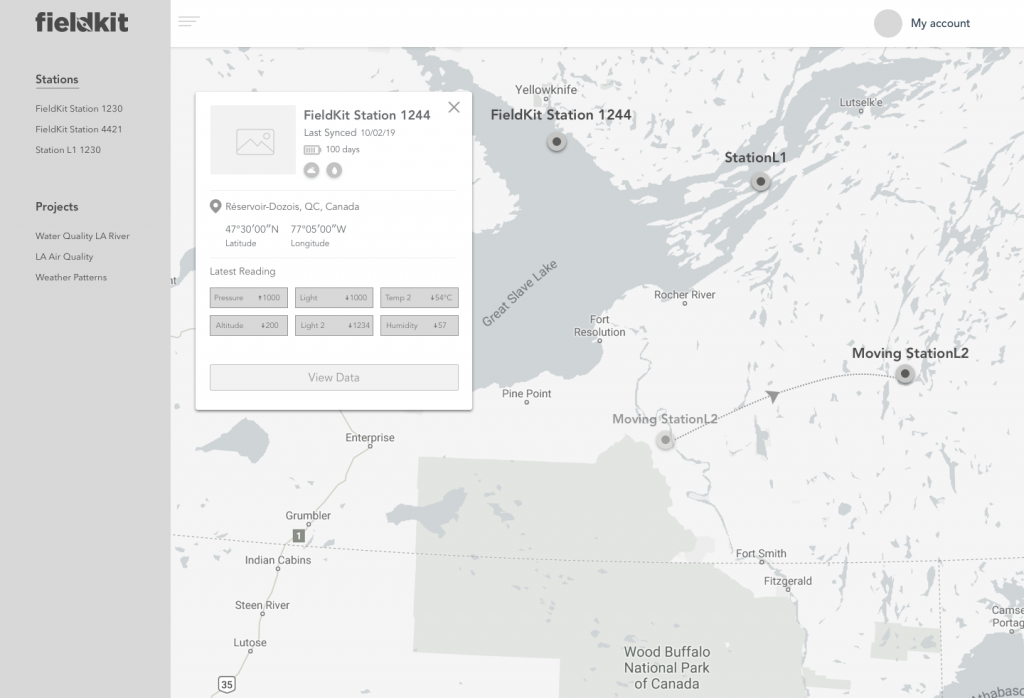

The portal is where users register and manage their accounts. It has tools for administrative tasks and for listing, monitoring, and cataloging FieldKit stations. It’s responsive, but it’s primarily meant to be used on a desktop because of the data visualization tools it provides for exploring the data streaming in from actively running FieldKit stations.

We approached the development of the portal like most of our other efforts. Even though it’s the lowest priority, it has grown as we’ve needed functionality to support the most important user stories being developed in the mobile app and the firmware: authentication, accepting data from the app and WiFi-enabled stations, querying that data, and basic visualization so the team can monitor the data coming from the early hardware. Even so, we started wireframing the portal early on, as you can see in these images. Once the user experience is dialed in and mostly settled, our talented designer(s) start to add color and apply our style guide.

From a technical perspective, the portal looks like any other modern site you’re likely to see:

- React/Vue. Work on the portal began several years ago, and in the interim, as our team has grown, we’ve come to prefer Vue.js for a number of reasons, some political and some technical. Most of the administrative areas are React and will stay that way as long as they’re stable, but as new features and user feedback come in they’ll be rewritten and brought up to date with the more modern sections.

- Golang. Arguably an odd choice. Go is a great language, though, and while certainly prone to some verbosity, it’s very fast (and getting faster) and extremely readable and accessible. We have a lot of internal tooling that’s also written in Go, and the ability to cross compile easily has been absolutely fantastic. For example, we have several builds that produce binaries for darwin-amd64, linux-amd64 and linux-arm, and being able to share tooling code with code that runs on the backend is very convenient.

- PostgreSQL. An easy choice. PostgreSQL has been a favorite database of many team members for years and continues to get better. Plus, with access to PostGIS and several time series extensions, this was the clear winner. It’s also supported on RDS which, while more expensive, takes care of operational work we would otherwise have to stress about day to day.

- AWS. Probably one of the most controversial choices on the team. During our discussions about the company values we’ve talked at length about finding an alternative cloud provider that is more green and environmentally friendly. Unfortunately, our institutional knowledge and our use of Terraform for scripting the infrastructure meant we could be far more productive sticking with AWS in the near term.
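
To make the cross compilation point from the Golang item concrete, here is a minimal sketch of how the three targets named there map onto Go’s standard `GOOS` and `GOARCH` environment variables. The tool name `fktool`, the `./cmd/` layout, and the `build/` output directory are assumptions made for illustration, not the project’s actual build setup.

```go
package main

import (
	"fmt"
	"runtime"
	"strings"
)

// targets mirrors the three platforms named in the text.
var targets = []string{"darwin-amd64", "linux-amd64", "linux-arm"}

// buildCommand returns a shell command that cross compiles one tool for one
// target. The tool name and directory layout are hypothetical.
func buildCommand(tool, target string) (string, error) {
	parts := strings.SplitN(target, "-", 2)
	if len(parts) != 2 || parts[0] == "" || parts[1] == "" {
		return "", fmt.Errorf("invalid target %q, want os-arch", target)
	}
	return fmt.Sprintf("GOOS=%s GOARCH=%s go build -o build/%s-%s ./cmd/%s",
		parts[0], parts[1], tool, target, tool), nil
}

func main() {
	// The same source can also inspect the platform it was compiled for.
	fmt.Printf("running on %s-%s\n", runtime.GOOS, runtime.GOARCH)

	for _, target := range targets {
		cmd, err := buildCommand("fktool", target)
		if err != nil {
			fmt.Println("skipping:", err)
			continue
		}
		fmt.Println(cmd)
	}
}
```

Because the compiler handles every target from one machine, a shared package can be imported by both a command line tool and the backend without any per-platform build hosts.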

One technical note that some readers may find interesting is that architecturally there are actually two separate backend services. The first is monolithic and embodies what most people imagine as the backend. It implements the API, acting as the arbiter of state and business logic. The other, which we call the ingester, is extremely small and rarely modified. Its job is to accept data from stations and apps in the field and store it on S3.

One of the main reasons the portal is a lower priority is that it’s the component with the fastest turnaround on development. Hardware has the longest cycle, followed by firmware and then the mobile app. Even though the mobile app is a hybrid and built on similar technology to modern websites, there’s still the process of running on phones and testing on multiple devices and platforms. Today’s web, thankfully, is a much more cohesive platform.