Increasing Camera Trap Efficiency with Machine Learning

BBC Wildlife Camera-trap Photo of the Year 2014

As machine learning becomes more and more ubiquitous, the development of smart devices and IoT that’s “on the edge” should become dramatically easier. Given this, I was interested in exploring applications for machine learning in conservation biology, specifically in animal recognition for camera trap images.

Camera traps, used by environmentalists, filmmakers, and researchers, are deployed into the wild to monitor animals. Triggered by infrared sensors, camera traps can detect movement and autonomously capture photos and videos of animals. This is a boon and curse for environmental monitoring because image results can vary widely, ranging from false positives to shots worthy National Geographic. Similarly, researchers may collect an overabundance image data or none at all. The variability in camera trap results makes image processing and deployment management an incredibly tedious process.

Using object detection and image classification models, we can make animal monitoring much more efficient. The range of efficiency, however, depends on how machine learning is applied. In this article, I will provide a brief introduction into two possible avenues to explore.

But before jumping in, I would like to first cover the limitations of machine learning for image processing. While it would be immensely convenient to use machine learning to identify species all over the world, there are simply too many species for one model to cover. The most practical application of machine learning would be to build an object detection model to identify the presence of animals in an image, and then complement the object detection model with a regional, geographically-specific image classification model. The results of object detection models for animals have been proven to be quite robust, reducing the time spent sifting through false positives. Furthermore, image classification models trained on smaller subsets of animals have also provided accurate results.

So, the first and most straightforward method of increasing animal recognition efficiency is to simply run camera trap images into an object detection and image classification model once all images are collected. In my time perusing the internet for available models, I found an open-sourced animal object detection model run on InceptionNet that was built by Microsoft’s AI for Earth project.

Unfortunately, I was unable to find any open sourced image classification models because of its lack of generalizability. There have been multiple research efforts examining the accuracy of animal classification, and results have shown that a model trained for a specific location will perform significantly worse when applied to a new geographic region. An example may be found here.

Most models were built to research image classification accuracy, trained for specific locations such as the American Midwest. When applied to a new location, image classification accuracy decreases significantly. If you are interested in training your own image classification model, you may find valuable datasets in Lila Science, a library for environment-related datasets. I would recommend using the datasets, Caltech Camera Traps or Snapshot Serengeti.

The second and more ambitious method is to build a camera trap “on the edge.” There are currently no camera traps that offer on-device machine learning, but it is quite easy to build a nascent one using accessible hardware.

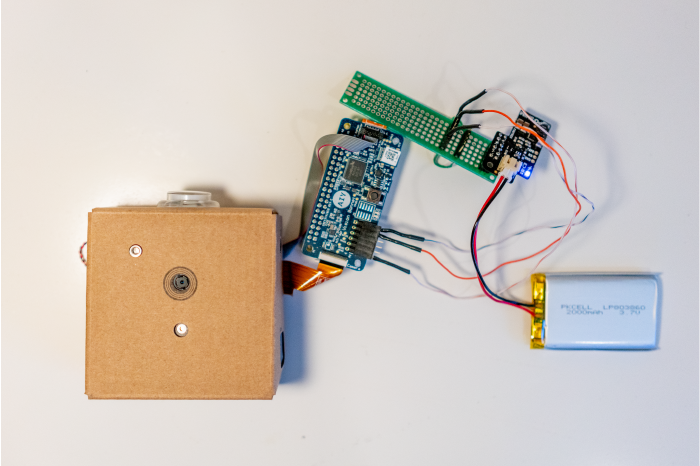

To test this possibility, I simply hacked a Google AIY vision kit, which comes with a Raspberry Pi, Vision Bonnet (Google’s ML processing board), Pi Camera, and other hardware pieces to tie it all together. To make it a real camera trap, I added my own PIR sensor that would trigger the camera to take an image and process it with a model.

On the software side, there were already a number of built in TensorFlow models that were fun to play with but not all that useful for building a camera trap to recognize animals. Nonetheless, I used TensorFlow’s image classification model to build a simple prototype and test functionality within the Conservify lab. To really build robust machine learning models “on the edge,” you can retrain the TensorFlow object detection model using SSD MobileNet with the same database that was used to build Microsoft’s model. Tutorials to learn how to retrain TensorFlow models are also available on Tensorflow’s website.

Beyond machine learning, there is also a big space for technology that allows for real time updates using tools like LoRa or cellular connection. Real time updates could help reduce the duration in which camera traps are deployed in the wild by notifying users when images have been taken. We at Conservify are currently experimenting with various types of communication hardware to tackle this problem. We’ll hopefully provide an article on this soon. In the meantime, have fun exploring! There is so much potential for innovation in the space of animal recognition and camera traps.